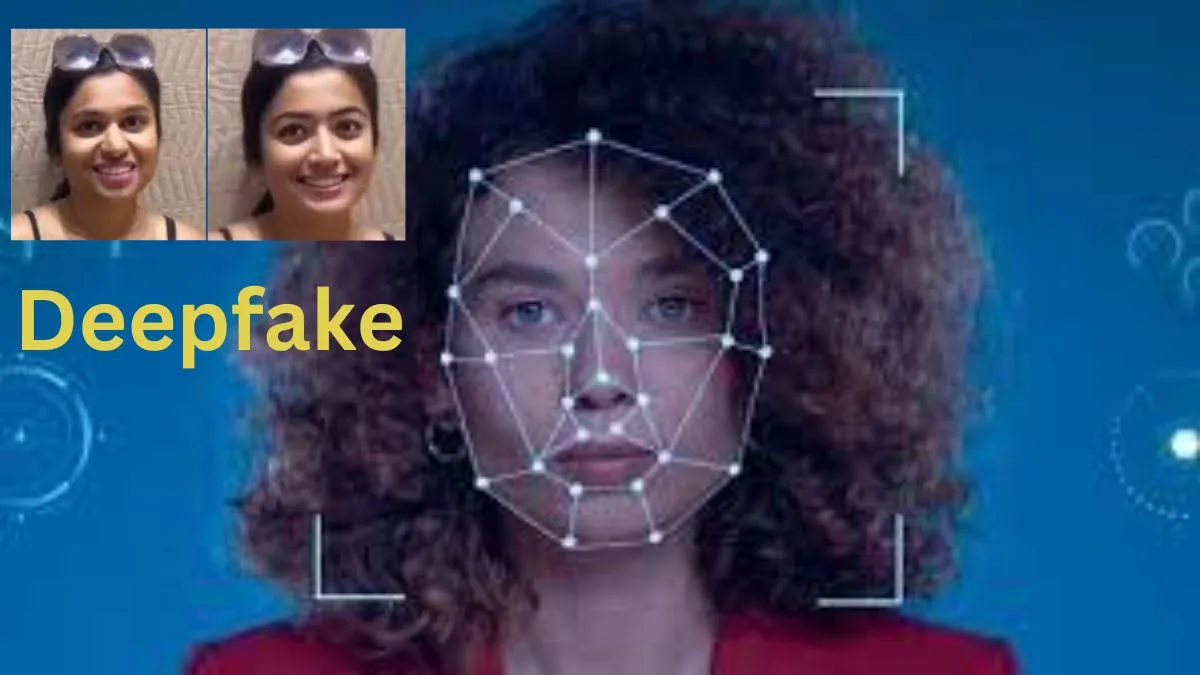

An explicit deepfake video of Rashmika Mandanna (of Animal fame) has surfaced. Deepfake AI videos are made and sent to victims or their parents, who are then blackmailed for money. Even Kim Kardashian and Emma Watson couldn’t prove that such videos of them were not real.

Deepfake Videos Are Increasing Day by Day

Be careful! In Almendralejo, a small city in Spain, 28 girls from one school were targeted. AI was used to turn their photos into explicit deepfake videos, which were then sent to them. The videos spread everywhere, and those girls’ lives have been ruined. AI deepfakes have destroyed the lives of many girls, and they continue to do so.

For those who don’t know, a deepfake video swaps a person’s face using AI, and making one is not very difficult. Thousands of applications on the market can swap your face with someone else’s, and women are the main target. There are currently 244,625 explicit deepfake videos in circulation, and 90% of them are of women and girls.

According to Wide News, female students receive deepfake videos almost daily, and the number is growing severalfold every year. You can see how dangerous the human mind becomes when it uses AI to ruin girls’ lives. The 28 girls, along with many others, have deleted their Facebook and Instagram accounts.

Girls Targeted by Deepfake Videos Are Suffering from Depression

Many of these girls have developed persistent depressive disorder. They cannot settle back into their lives: when they go out, they feel that people have seen their videos and are judging them. So they avoid social events and celebrations, stay at home, and have dropped out of school.

In this way, their lives have been ruined. AI is not yet so advanced that its fakes can’t be detected, and still this much damage is being done.

How Are Deepfake Videos Destroying Girls’ Lives?

Girls are harmed in three ways:

- First, explicit deepfake videos of them are made and sent to them or their parents, and they are blackmailed for money.

- Second, the videos are posted on the internet, on platforms like OnlyFans, and whoever posts them earns money from them.

- Third, there are groups on Telegram where despicable people pay for deepfake videos of girls they know, saying, “Yes, make one of her for us, and we will watch it.”

And this isn’t only happening on Telegram; it’s happening on Google, Facebook, Instagram, and many other websites. Worst of all, apart from the Netherlands, no country has a strict law against creating deepfake videos, and even where one is enacted, enforcing it is extremely difficult.

Many Social Media Platforms Claim to Ban Deepfake Videos

Deepfake videos are spreading very rapidly. Google and Facebook/Meta have said that they oppose deepfake videos and have implemented algorithms to stop them from spreading at all. Yet, remarkably, an NBC investigation found something else.

Ads promoting deepfake videos are appearing on Instagram. But what can you expect from Instagram? Sex workers and OnlyFans creators promote themselves there; it has practically become soft porn itself. If it stopped deepfakes, its business would come to a halt. It’s like putting a dog in charge of guarding the jalebis.

Is Deepfake AI Dangerous?

Some people think AI is so dangerous that it should never have been developed. But AI is not dangerous; the human mind is. The filth is in the person’s mind. If someone commits murder with a knife, the knife is not to blame. AI has many benefits: it is used for entertainment and education, it excels in fields like painting, and it creates new jobs.

So the fault lies not with AI but with the human mind. We always blame technology while continuing to treat women as objects; misogyny has become widespread in society. This patriarchal society, which sees women merely as objects, treats them as entertainment. This mindset needs to change.

Tell the people around you that women are not secondary but primary. If children are taught respect from childhood, maybe things will change. And I request you: if you come across a deepfake video of any girl, or of anyone, report it. Otherwise, tomorrow you might find a video of a member of your own family.

How Does Deepfake AI Damage the Careers of Film Stars and Influencers?

Another significant harm of AI is damage to reputations. Recently, an explicit deepfake video of Rashmika Mandanna surfaced. Because she is a popular celebrity, the video became widely known, and many people still believe she made it herself. She is receiving inappropriate and disrespectful comments. Similarly, Kim Kardashian and Emma Watson couldn’t prove that such videos of them were not real.

People assumed they were porn stars who had become popular through these videos. Many girls are never able to restore their reputations. Preity Zinta was also targeted with a video, on which she didn’t even comment, yet her image was damaged. Many actresses have lost work, and their public image has been tarnished.

You may have heard about actresses being linked to sex tapes and the like; such videos have seriously hurt their careers and images. The third thing that scares me most is the high chance of future conflicts as AI advances, because nobody will know who really said what.

How Is Deepfake AI Damaging the Careers of Politicians?

Let me start with politicians, who have already suffered losses, because their words can fuel conflicts within a country. A deepfake video of Donald Trump aired on TV in which he was telling his employees directly, “You’re fired, you’re fired, you’re fired…”

People believed employees were being fired on TV, and the employees revolted, asking, “What does this mean, on TV?” Eventually, it was discovered that the video was a deepfake. In Turkey, a deepfake changed an election completely: there were two parties, a deepfake video was shown linking one party to a terrorist, and the other party won the election.

Take Ukraine, where a deepfake video of the Ukrainian president appeared, telling his soldiers to lay down their weapons, go home, and meet their families. The situation was quickly cleared up, but had the soldiers obeyed, it could have been disastrous. And Joe Biden, currently President of the United States, is a regular target.

India is not safe either; there are deepfakes showing Modi playing Garba. Right now they only show him playing Garba, but the future will be worse as AI develops further. And here is something important: once AI develops a bit more, or even now, social media recommendation feeds will use it heavily to sway your decisions.

How Do Deepfake Videos Stoke Religious Tensions?

Cyber troops are used in 63 countries. They post content favoring the government in your feed on Facebook, Instagram, YouTube, wherever you are, including Hindu-Muslim content: anything that can push your decision in the government’s favor.

They create an echo chamber. So during elections, whether you follow such accounts or not, some deepfake videos will still show up in your feed. When Hindu-Muslim tensions are running high, a popular figure or a politician will appear to say something sensitive in the name of religion.

These will be fake videos, and you won’t even recognize them. Riots could break out in the many small towns where people are less familiar with this technology. So if you come across a video on a sensitive issue, especially one about religion, don’t trust it blindly.

Check it thoroughly, a hundred times if you must, before reacting. Don’t forward it. I request you not to fight with your brothers over a video that could be fake.

How Are Deepfake Videos Used for Money Scams?

Deepfake scams are becoming the most dangerous of all, and they will grow a lot from here. Ordinary people’s money is being looted.

Take Martin Casado from California. While he was in Japan, his father got a call, apparently from him, saying, “I’ve had a car accident, so I need $10,000. Please transfer it.” His father agreed and went to send the money. When he got there, he called his son back to ask which form to fill out. And what did his son say? There had been no accident at all.

It turned out the voice on the call had been cloned by AI; Martin Casado had never made that call. His father saved the $10,000 only by chance; otherwise it would have been stolen. He was lucky, but Radhakrishnan from Kerala was not. He received a WhatsApp call from a friend from Andhra Pradesh.

The friend said there was a problem in his family and he needed ₹40,000, which he promised to return quickly. Radhakrishnan said, “Okay, no problem.” The conversation took place over a video call, and he transferred the ₹40,000. Afterward, he called his friend on the friend’s usual number, since the earlier call had come from a different one, and asked, “Did you get the money?” The friend replied, “What are you talking about? Which money?” “I just sent it.”

In fact, that video call was a deepfake, with the face swapped by AI. It’s astonishing: the man couldn’t even tell that he wasn’t talking to his friend. This is a reported fraud; who knows how many more go unreported. AI can now copy your voice and your face exactly.

How Can You Detect Deepfake Videos?

Deepfakes are not yet 100% perfect; there is always some flaw. So there are a few things you can do. When you’re watching a deepfake video or are on a video call with a face swap, look for those imperfections.

There may be a slight jitter, the picture may be dim or low-resolution, or something strange happens; the caller will avoid good lighting and stay in partial light. And if the call comes from an unknown number, don’t believe it. The point is: if you need to give someone money, it’s better to meet them in person. Don’t trust video calls and the like, because AI will keep improving.

Soon you won’t be able to tell what’s happening, who is real and who is fake. So meet people in person and save your money. AI is becoming more dangerous now that it is working hand in hand with the human mind. Again, I don’t blame AI; I blame humans. Singers’ voices are being copied, and face swaps are happening everywhere.

Conclusion

Soon these fakes will become 100% perfect. Tell your friends, so that their money is saved and the lives of girls like these are saved. Perhaps a million, maybe a million and a half, people will watch this video, but many others will never see it or similar videos made by other influencers. This information may not reach everyone, so it becomes your responsibility to tell people that these problems are happening.

Poor people’s hard-earned money will be looted. And if you get a call like this from a relative asking for ₹40,000 or ₹50,000, you know what to do: just tell them off.

(Source: a report by MIT Technology Review.)